MAE148-Autonomous Car Project

An Autonomous Driving Project

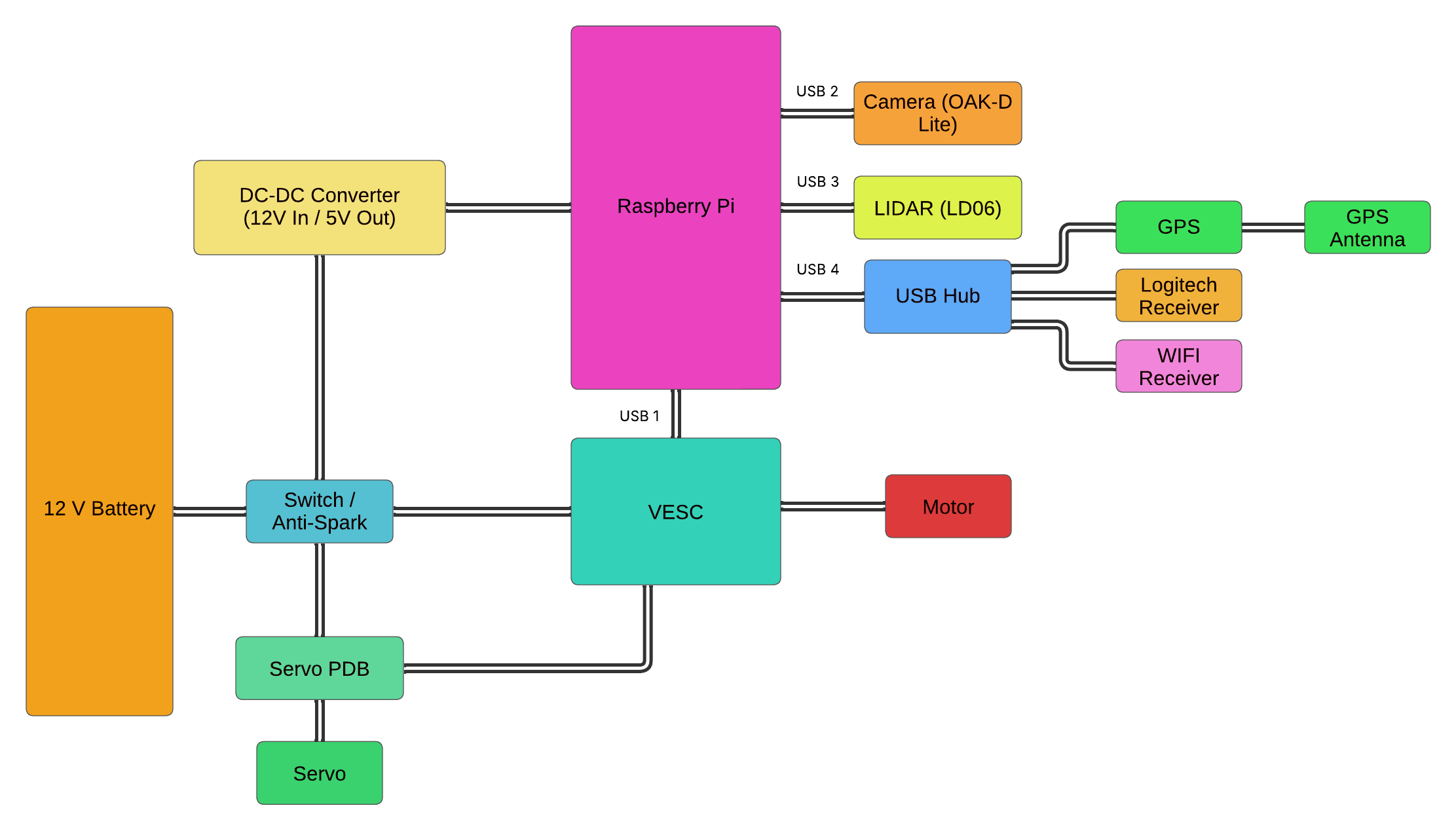

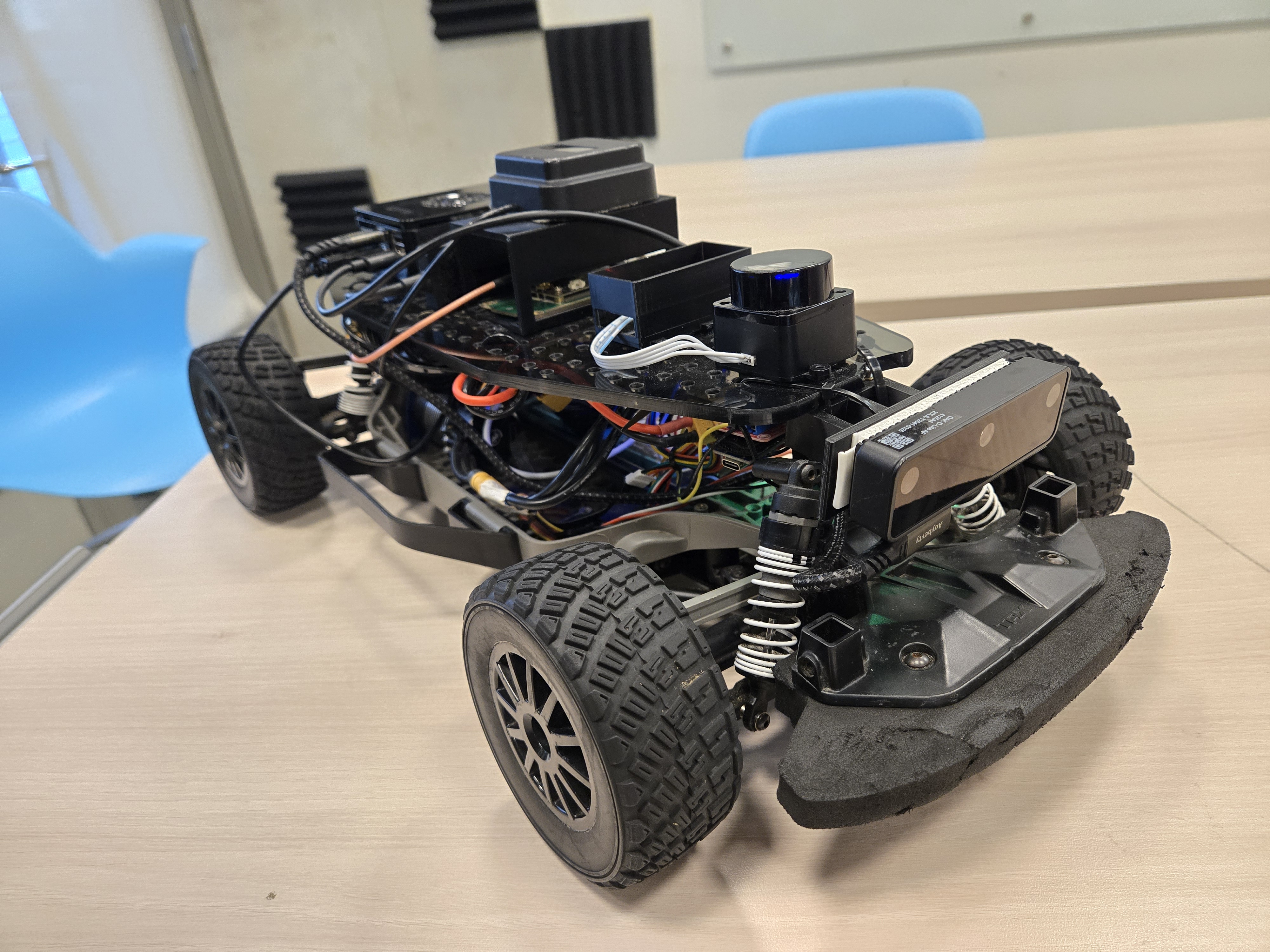

In this project, my teammates and I built an autonomous car from scratch. The accompanying images show the car’s construction and appearance.

Through this project, we trained the car to accomplish several tasks, including:

- Completing autonomous laps using the DonkeyCar KerasCNN model (input: RGB images; output: throttle and steering angle)

- Completing autonomous laps using GPS

- Performing autonomous lane-following laps using OpenCV (cv2)
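The write-up does not describe the lane-following pipeline, so the following is only a minimal sketch of one common approach: threshold the lane color into a binary mask (with OpenCV this would typically be done with `cv2.inRange`), find the horizontal centroid of the lane pixels, and steer proportionally toward it. To stay dependency-free, the sketch takes the mask as a nested list of 0/1 values. The function names, the gain `kp`, and the steering convention (range [-1, 1], negative is left, positive is right) are illustrative assumptions, not the project's actual code.

```python
def lane_offset(mask):
    """Normalized horizontal offset of lane pixels: -1 (far left) to +1 (far right).

    `mask` is a 2D list of 0/1 values (assumed at least 2 columns wide),
    e.g. a thresholded band from the lower part of the camera image.
    Returns None when the mask contains no lane pixels.
    """
    width = len(mask[0])
    xs = [x for row in mask for x, value in enumerate(row) if value]
    if not xs:
        return None
    centroid = sum(xs) / len(xs)
    half = (width - 1) / 2
    return (centroid - half) / half


def steering_from_mask(mask, kp=0.8, last_steering=0.0):
    """Proportional steering command in [-1, 1] from a binary lane mask."""
    offset = lane_offset(mask)
    if offset is None:
        # Lane lost: hold the previous command instead of guessing.
        return last_steering
    return max(-1.0, min(1.0, kp * offset))


# Hypothetical 2x5 mask with the lane on the right side of the image.
mask = [
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
]
steering = steering_from_mask(mask)  # positive value: steer right
```

A proportional term alone tends to oscillate at higher speeds; a derivative term or a lower gain is the usual remedy, and the right values depend on the car and track.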

Final proposed task: automatically detecting and reaching a broken-down car

- Setup: We used a surveillance camera to monitor the road. The car was equipped with both lidar and a camera. When the surveillance camera detected a blinking hazard light, it signaled the car to search for the broken-down vehicle and park behind it to provide assistance.

- Solutions:

- The surveillance camera used a blinking light detection model developed with OpenCV (cv2). When a hazard light was detected, a signal was sent to the car via a ROS2 topic.

- The car used an object detection model served through Roboflow to identify the broken-down car and employed a PID control algorithm to approach it.

- Lidar was used to determine the distance to the target. A basic sensor fusion technique was implemented to accurately map the object detected by the camera to the lidar output.
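The approach and ranging steps above can be sketched roughly as follows. This is a hedged illustration, not the project's implementation: it assumes a pinhole camera with a known horizontal field of view, a lidar scan described by `ranges`, `angle_min`, and `angle_increment` (the same field names as the ROS2 `sensor_msgs/LaserScan` message), lidar angles that are counterclockwise-positive with zero pointing straight ahead, and a camera aligned with the lidar's forward axis. All function names, gains, and thresholds are hypothetical.

```python
import math
from statistics import median


def pixel_to_bearing(cx, image_width, hfov_deg):
    """Bearing (rad) of image column `cx` under a pinhole model; right is positive."""
    fx = (image_width / 2) / math.tan(math.radians(hfov_deg) / 2)
    return math.atan((cx - image_width / 2) / fx)


def lidar_range_at(angle, ranges, angle_min, angle_increment, window=2):
    """Median of the valid ranges within `window` beams of `angle` (lidar frame)."""
    idx = round((angle - angle_min) / angle_increment)
    if idx < 0 or idx >= len(ranges):
        return None  # the angle lies outside the scanned sector
    lo, hi = max(0, idx - window), min(len(ranges), idx + window + 1)
    valid = [r for r in ranges[lo:hi] if math.isfinite(r) and r > 0]
    return median(valid) if valid else None


class PID:
    """Textbook PID controller; gains must be tuned on the real car."""

    def __init__(self, kp, ki=0.0, kd=0.0):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def update(self, error, dt):
        self.integral += error * dt
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def approach_step(cx, image_width, hfov_deg, scan, steer_pid, dt,
                  stop_distance=0.5, cruise=0.2):
    """One control step: returns (steering in [-1, 1], throttle, distance)."""
    bearing = pixel_to_bearing(cx, image_width, hfov_deg)
    # Camera bearing is right-positive; the assumed lidar frame is left-positive.
    distance = lidar_range_at(-bearing, scan["ranges"],
                              scan["angle_min"], scan["angle_increment"])
    steering = max(-1.0, min(1.0, steer_pid.update(bearing, dt)))
    # Conservative choice: do not move without a trusted range reading.
    moving = distance is not None and distance > stop_distance
    return steering, (cruise if moving else 0.0), distance


# Hypothetical 181-beam scan covering -90 to +90 degrees, target 1 m dead ahead.
scan = {"ranges": [2.0] * 181, "angle_min": -math.pi / 2,
        "angle_increment": math.pi / 180}
scan["ranges"][88:93] = [1.0] * 5
steering, throttle, distance = approach_step(320, 640, 60, scan, PID(kp=1.0), dt=0.05)
```

Here `cx` stands for the horizontal center of the detector's bounding box. Taking the median over a few neighboring beams is one simple way to reject a single spurious lidar return; the sketch ignores scan wrap-around and any offset between the camera and lidar mounts.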

Final project demo video:

Resources

References

- MAE 148 course - Introduction to Autonomous Vehicle course.

- DonkeyCar - An open source Self Driving Car Platform.

- Roboflow - A platform to build and deploy computer vision applications.